Humans have been attempting to teach computers to read their emotions since the 1990s, when MIT professor Rosalind W. Picard founded the field of affective computing.

Almost 30 years later, affective computing technologies are starting to appear in the commercial mainstream. These include the Microsoft Emotion API, which analyzes facial expressions to detect a range of feelings, and Affectiva’s Emotion Speech API, which identifies emotion in pre-recorded audio segments.

These technologies may have particular significance for individuals with autism spectrum disorder (ASD), who often struggle with social interaction and communication.

The potential is obvious: If smartphone apps could provide unobtrusive emotional cues in real time, they could be used by individuals with ASD to facilitate social interactions.

Facial expression analysis could provide real-time feedback to those with ASD on the emotional state of people they’re having conversations with.

And wearable physiological sensors — such as the biomusic technology I develop — could provide individuals with ASD moment-to-moment insights on other people’s emotional reactions.

Music of the nervous system

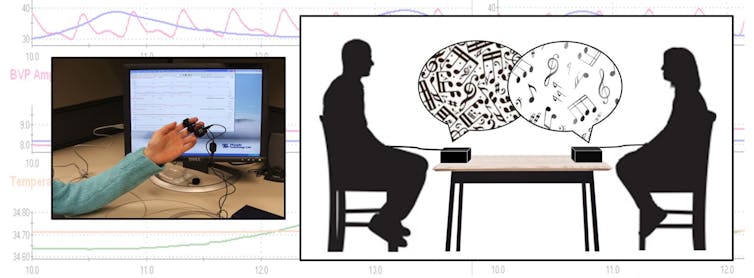

I lead a team that is actively developing a technology called “biomusic” that translates physiological signals into musical output.

The technology collects autonomic nervous system signals — such as the amount of sweat in the skin and heart rate — through a wearable sensor. It maps features of these signals to sounds, generated and synthesized in real time.
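To make the idea of mapping signal features to sound concrete, here is a minimal sketch in Python. It is not the biomusic system itself: the choice of pitch and tempo as sound parameters, the input ranges, and the names `SoundParams`, `scale` and `map_to_sound` are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class SoundParams:
    """Hypothetical sound parameters driven by physiological signals."""
    pitch_hz: float
    tempo_bpm: float


def scale(value: float, lo: float, hi: float, out_lo: float, out_hi: float) -> float:
    """Linearly map value from [lo, hi] to [out_lo, out_hi], clamping at the ends."""
    if hi <= lo:
        raise ValueError("hi must be greater than lo")
    t = (value - lo) / (hi - lo)
    t = min(1.0, max(0.0, t))  # keep out-of-range readings inside the output range
    return out_lo + t * (out_hi - out_lo)


def map_to_sound(skin_conductance_us: float, heart_rate_bpm: float) -> SoundParams:
    """Map two autonomic signals to sound parameters.

    Assumed ranges (illustrative only): skin conductance 1-20 microsiemens
    drives pitch between 220 and 880 Hz; heart rate 50-150 beats per minute
    drives tempo one-to-one.
    """
    pitch = scale(skin_conductance_us, 1.0, 20.0, 220.0, 880.0)
    tempo = scale(heart_rate_bpm, 50.0, 150.0, 50.0, 150.0)
    return SoundParams(pitch_hz=pitch, tempo_bpm=tempo)


if __name__ == "__main__":
    # A calm reading followed by a more aroused one.
    print(map_to_sound(skin_conductance_us=2.0, heart_rate_bpm=65.0))
    print(map_to_sound(skin_conductance_us=12.0, heart_rate_bpm=110.0))
```

In a sketch like this, a rise in skin conductance would be heard as a rising pitch and a faster heartbeat as a faster tempo. A real system would also need to filter sensor noise and synthesize audio, which this example leaves out.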

Since autonomic nervous system signals change with emotional state, changes in biomusic can reflect increases in anxiety. They can also reflect recognition of another person.

In one of our pilot studies, for example, we demonstrated that the autonomic nervous system signals of children in complex continuing care changed in the presence of therapeutic clowns, even when there was no other evidence of recognition or responsiveness.

My initial research demonstrated that playing the biomusic of a child with profound multiple disabilities improved their caregiver’s interactions with, and perceptions of, them.

Risks of ‘designing for autism’

When the autistic community began expressing an interest in using biomusic for individuals with ASD, I struggled to find a way to tailor the technology to their needs without falling into the trap of “designing for autism.”

Those in the autistic community have pushed back against generalizations about autism. Many are fond of the saying “If you’ve met one person with autism, you’ve met one person with autism,” which they use to ward off well-intentioned but ill-informed designs based on preconceived assumptions about ASD.

Other problems can arise in the practice of “designing for autism.” Technologies designed for a specific (dis)ability run the risk of becoming stigmatizing, even if they solve a real problem.

The ethical questions that accompany all affective technologies, such as how to maintain privacy, become much bigger for autistic individuals, who often struggle with emotional expression and awareness.

Stories of inclusion and exclusion

In efforts co-led by medical anthropologist Melissa Park and art historian Tamar Tembeck, we sought guidance from a range of people. These included persons on the spectrum, family members, educators and employers of people on the spectrum, academics and members of the tech industry.

Our group of 30 people came together for a three-day workshop in Montréal to discuss how to effectively meld biomusic and autism. During the workshop, we engaged with biomusic in various ways to explore its potential opportunities, uses and misuses.

We walked through a fine arts museum while broadcasting our real-time biomusic to gain an understanding of what it was like to have strangers aware of our internal reactions.

We observed a dancer, Erin Flynn, dancing to her own biomusic, which allowed us to see the connection between her movement and the music — and to juxtapose the stillness of her body with the effort revealed by the intensity of her biomusic.

We listened to stories of inclusion and exclusion of people with ASD from our community partners, and lectures on theoretical frameworks for biomusic technology from our academic partners.

Over the three days, our group struggled through ethical, aesthetic and practical issues at the interface of biomusic and autism, and debated ways to address these concerns in future iterations of the technology.

Encoding sound for privacy

The discussions have profoundly shaped my team’s approach to designing biomusic technology. The anecdotes and stories shared by community members sparked ideas on how to overcome some nagging technical challenges.

For example, our approach to issues of privacy shifted when we heard an individual with ASD describe the 20 different sounds she heard in a train engine when neurotypical individuals (those who aren’t on the spectrum) were only hearing two.

As a result of this story, we started designing auditory displays for biofeedback in which critical information about emotional changes was encoded to be imperceptible to neurotypical people.

A set of critical academic talks also inspired my team to design ways in which neurotypical people and those with ASD could experience their biomusic together.

A collaborative design process

This avenue of thinking has not only allowed us to explore potential new forms of interpersonal interaction, but has also furthered our understanding of how to reduce the stigma associated with using our assistive technology.

Formal and informal workshop discussions about the nature of emotion, from the perspective of those with ASD, steered us to design biomusic’s user interface so that the process of labelling an emotion was both collaborative and contextual.

These design decisions not only improve the information that biomusic receives about a user’s emotional state, but also address a broader challenge in affective computing: gathering reliable subjective reports of an individual’s moment-to-moment emotional state.

Navigating the dangers of “designing for autism” becomes possible when designers create ways for individuals with autism, and those who work and live with them, to become deeply engaged in the design process.

This practice increases the acceptance of the final technology in the autism community. It also results in innovative design ideas and implementations that can benefit individuals across the spectrum of neurodiversity.

Stefanie Blain-Moraes receives funding from the Natural Sciences and Engineering Research Council (NSERC), the Canadian Institutes of Health Research (CIHR), the Social Sciences and Humanities Research Council (SSHRC) and the Fonds de Recherche Nature et technologies Quebec.